👋 Hey there, Pedma here! Welcome to the 🔒 exclusive subscriber edition 🔒 of Trading Research Hub’s Newsletter. Each week, I release a new research article with a trading strategy, its code, and much more.

Hey everyone!

Have you ever developed a trading system and wondered if it’s really going to make you money once deployed in the market?

Wait no more!!

After this article…

… you still WON’T know for sure!

And that’s the beauty of being a trader.

The best we can do is tilt the odds in our favor.

There are no perfect solutions, and I am certainly not claiming to have them.

In our systematic trading “niche”, there’s a lot of talk about making sure that our models are as robust as we can make them.

And that’s a very valid conversation.

Like any smart business, we want to give our models the best odds of succeeding once deployed.

It’s all about stacking the odds in our favor.

Today we will be going through some of these robustness validation techniques.

At least those that I’ve found useful over the years.

Yes, we can nerd out on this stuff, but at some point it has diminishing returns, and we have to deploy our model to know for sure whether it will work.

If you have followed me for some time, you know that I like to keep things simple and aim for the largest impact possible.

There’s tons of stuff online, but not all of it is useful.

At the end of the day, the best robustness technique will be your own intuition about what should work.

And this intuition is only built over time…

… through careful thinking about markets and actual practice of the trade.

But as researchers, we can add some “science” to the madness.

There are certain things I like to see checked before considering a model ready for deployment.

That’s what we’ll be looking into in today’s article!

Index

Introduction

Index

The Idea Behind Robustness Validation Techniques

Sharpe’s Statistical Significance

In-Sample and Out-Sample Testing

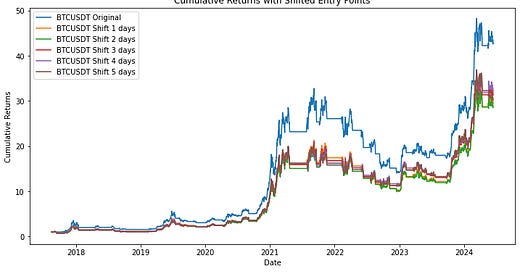

Shifting Dataset

Entry Point Sensitivity Analysis

Intra-Bar Entry Point Sensitivity Analysis

Slippage Sensitivity Analysis

Parameter Stability

Python Code

Conclusion

The Idea Behind Robustness Validation Techniques

There are tons of bad ideas out there.

Just because something works in a backtest does not mean it will work in the real market environment.

Imagine that you design new cars.

Do you think the best test for that new car is in the garage? Or in tough conditions that simulate real roads?

The road will show you if the car has a good chance of performing as you intended.

And even if you do all the proper testing on the road, you still don’t know for sure if it will hold up against the millions of variations of problems that may arise from active driving.

Only day-to-day use of the car in real environments will be the true test.

Same with a trading model.

As we’ve seen in previous articles, we can make the data tell us anything that we want as long as we “beat” it long enough.

However, what if we introduce some stress into our models? How will they perform then?

That’s the whole goal of validation.

We want to break the model. We want to break its underlying reliance on the future looking very similar to our historical data.

If a model depends too much on being executed at the perfect time, at the perfect price, with low costs, then you are being too optimistic about the model.

Things break, prices get out of whack, exchanges have delays, and costs can increase unexpectedly.

Plan for those, model for those, and you have less chance of being surprised.

At least you will know how your model should perform, given this new set of real feedback from the market.
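To make that idea a bit more concrete, here is a minimal sketch of what "adding stress" can look like. It is not the code from this article, just an illustration under my own assumptions: the function names `stressed_returns` and `annualized_sharpe`, the toy signal, the cost levels, and the 365 bars per year are all hypothetical choices. The idea is to re-run the same signal with later fills and higher costs, and watch how quickly the Sharpe ratio degrades.

```python
import numpy as np


def stressed_returns(returns, signal, delay_bars=0, cost_per_turnover=0.0):
    """Per-bar net returns of a signal after delaying fills and charging costs.

    returns: per-bar asset returns
    signal:  desired position decided at the close of each bar (e.g. -1, 0, 1)
    """
    returns = np.asarray(returns, dtype=float)
    signal = np.asarray(signal, dtype=float)
    # A signal decided on bar t can earn the return of bar t+1 at the earliest.
    # Every extra delay bar pushes the fill one more bar into the future.
    lag = 1 + delay_bars
    position = np.zeros_like(signal)
    position[lag:] = signal[:-lag]
    # Charge a cost on every change in position (entries, exits, flips).
    turnover = np.abs(np.diff(position, prepend=0.0))
    return position * returns - cost_per_turnover * turnover


def annualized_sharpe(net, periods_per_year=365):
    """Annualized Sharpe ratio; 365 bars per year is an assumption (daily bars)."""
    net = np.asarray(net, dtype=float)
    sd = net.std(ddof=1)
    return 0.0 if sd == 0 else float(np.sqrt(periods_per_year) * net.mean() / sd)


# Toy data only: random returns and a naive "follow the last bar" signal.
rng = np.random.default_rng(0)
rets = rng.normal(0.0005, 0.02, 1000)
sig = np.sign(rets)

for delay in (0, 1, 2):
    for cost in (0.0, 0.0005, 0.002):
        net = stressed_returns(rets, sig, delay_bars=delay, cost_per_turnover=cost)
        print(f"delay={delay} cost={cost:.4f} sharpe={annualized_sharpe(net):.2f}")
```

On random data like this the numbers mean nothing. What matters is the shape of the table on your own model: if performance collapses with one bar of delay or a small bump in costs, the backtest was leaning on perfect execution.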

Ok, let’s get to what you all are here for.

What are some tests that we can do to give us better chances?

Let’s begin with the common basic concepts that we need to keep in check before we go into more advanced stuff.